Organisations across diverse sectors are investing in AI-powered solutions to enhance efficiency, improve customer experiences, and gain a competitive edge. Yet, many encounter significant anxiety when contemplating regulatory compliance — particularly with respect to the newly enacted EU AI Act and the EU General Data Protection Regulation (GDPR). The threat of legal repercussions and reputational damage can overshadow the benefits of technological innovation.

Both the EU AI Act and the GDPR seek to protect individuals’ rights and well-being, albeit through different mechanisms. Adhering to these regulations can be challenging, especially for entities with limited experience in regulatory compliance. Companies need a structured approach to aligning with both regimes, but what’s the best way to go about it?

Overview of the EU AI Act

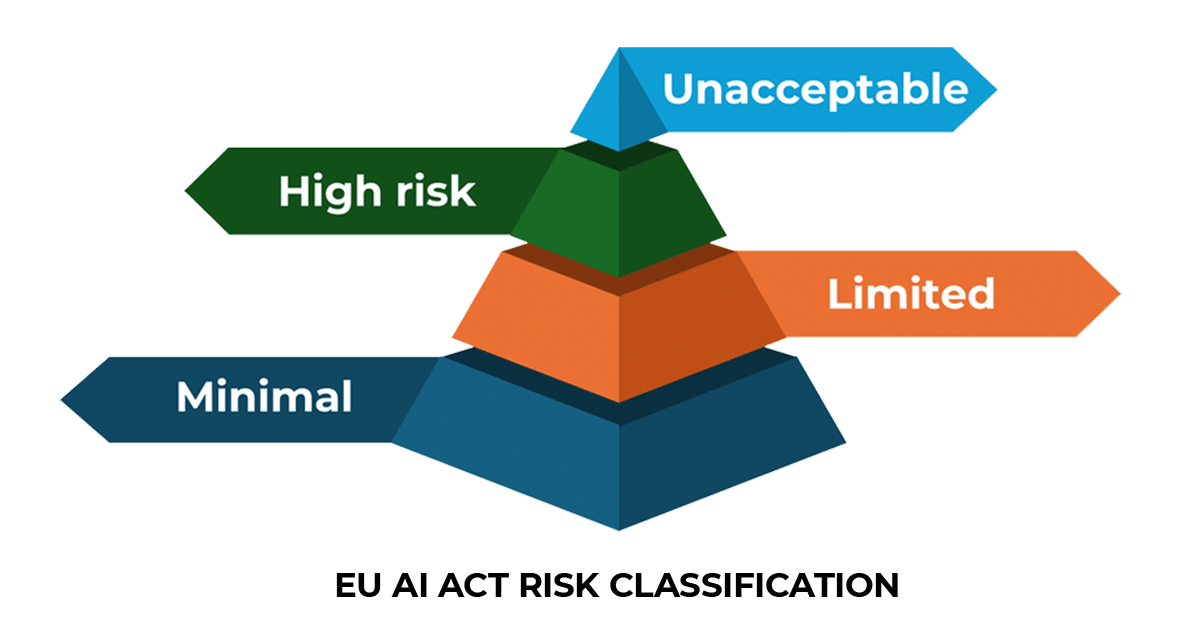

The EU AI Act has come into force to address the diverse risks associated with artificial intelligence and to safeguard individuals’ rights, safety, and well-being. It establishes a comprehensive legal framework ensuring that AI systems placed on the market or used within the European Union are trustworthy and comply with transparency, accountability, and oversight measures. In particular, the Act adopts a risk-based approach that imposes rigorous obligations on high-risk applications, while permitting a lighter compliance burden for lower-risk systems.

The four tiers of risk

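The EU AI Act classifies AI systems according to four tiers of risk:

- Unacceptable risk: practices considered a clear threat to people’s safety or rights, such as social scoring, are prohibited outright.

- High risk: systems used in sensitive areas listed in the Act, or as safety components of regulated products, must meet strict requirements before they can be placed on the market.

- Limited risk: systems such as chatbots or generators of synthetic content are subject mainly to transparency obligations, for example informing people that they are interacting with AI.

- Minimal risk: most other applications, such as spam filters, carry no specific obligations under the Act, although voluntary codes of conduct are encouraged.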

High-risk systems — commonly used in critical sectors such as healthcare or recruitment — face strict requirements on documentation, transparency, safety, and human oversight. The assigned risk category thus determines the depth and breadth of compliance measures, emphasising the Act’s overarching goal of fostering trustworthy, ethically sound AI technologies.
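To make the tiering concrete, here is a minimal Python sketch of how a compliance team might record screening answers and map them to a tier. It is a triage aid under stated assumptions, not a legal classification: the `ScreeningAnswers` fields, the `screen` function and the shortened obligation labels are hypothetical, and the three questions are a heavy simplification of the Act’s actual rules on prohibited practices and high-risk classification.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # prohibited practices (Article 5)
    HIGH = "high"                  # e.g. Annex III use cases such as recruitment
    LIMITED = "limited"            # transparency obligations (Article 50)
    MINIMAL = "minimal"            # no mandatory obligations; voluntary codes of conduct


# Simplified, non-exhaustive summary of what each tier triggers (hypothetical labels).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["do not place on the market or use"],
    RiskTier.HIGH: [
        "risk management system",
        "data governance",
        "technical documentation",
        "record-keeping (logging)",
        "transparency towards deployers",
        "human oversight",
        "accuracy, robustness and cybersecurity",
        "conformity assessment",
    ],
    RiskTier.LIMITED: ["disclose AI interaction or AI-generated content"],
    RiskTier.MINIMAL: [],
}


@dataclass
class ScreeningAnswers:
    """Hypothetical yes/no answers recorded by the legal/compliance team."""
    prohibited_practice: bool = False
    high_risk_use_case: bool = False  # e.g. listed in Annex III or a product safety component
    interacts_with_people_or_generates_content: bool = False


def screen(answers: ScreeningAnswers) -> RiskTier:
    """Return the most severe tier that applies, checking from the top down."""
    if answers.prohibited_practice:
        return RiskTier.UNACCEPTABLE
    if answers.high_risk_use_case:
        return RiskTier.HIGH
    if answers.interacts_with_people_or_generates_content:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL
```

For example, `screen(ScreeningAnswers(high_risk_use_case=True))` returns `RiskTier.HIGH`, and `OBLIGATIONS[RiskTier.HIGH]` lists the requirements to plan for. In practice the tiers are not strictly exclusive: a high-risk system can also carry the transparency duties of the limited tier, which this simplified sketch does not model.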

Key stakeholders and roles

Under this legislation, entities that engage with AI solutions must assume specific roles and responsibilities.

- A provider develops an AI system, or has one developed, and places it on the market or puts it into service under its own name or trademark.

- An importer brings such systems into the EU market from non-EU countries, ensuring they meet the Act’s requirements before distribution.

- A distributor makes the systems available to end-users or further intermediaries, verifying that the systems bear the requisite conformity markings and documentation.

- A deployer uses an AI system under its authority in a professional context and must operate it in accordance with the provider’s instructions for use.

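- A product manufacturer places an AI system on the market or puts it into service together with its own product and under its own name, for instance when the AI system is a safety component of a machine or medical device; in that case, it takes on the provider’s obligations for the high-risk AI system.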

Each of these roles is subject to specific obligations, from maintaining technical files to implementing corrective actions if non-compliance arises.

GDPR fundamentals

The General Data Protection Regulation was introduced to strengthen the protection of personal data within the European Union and to replace a fragmented legal environment with a single, harmonised framework. Since it became applicable on 25 May 2018, the GDPR has raised the global standard for data privacy and influenced legislation well beyond the EU’s borders. It applies not only to organisations established in the EU but also to organisations elsewhere, whether multinational corporations or smaller ventures, that offer goods or services to individuals in the EU or monitor their behaviour.

To learn more about the GDPR, see this article: What is GDPR?

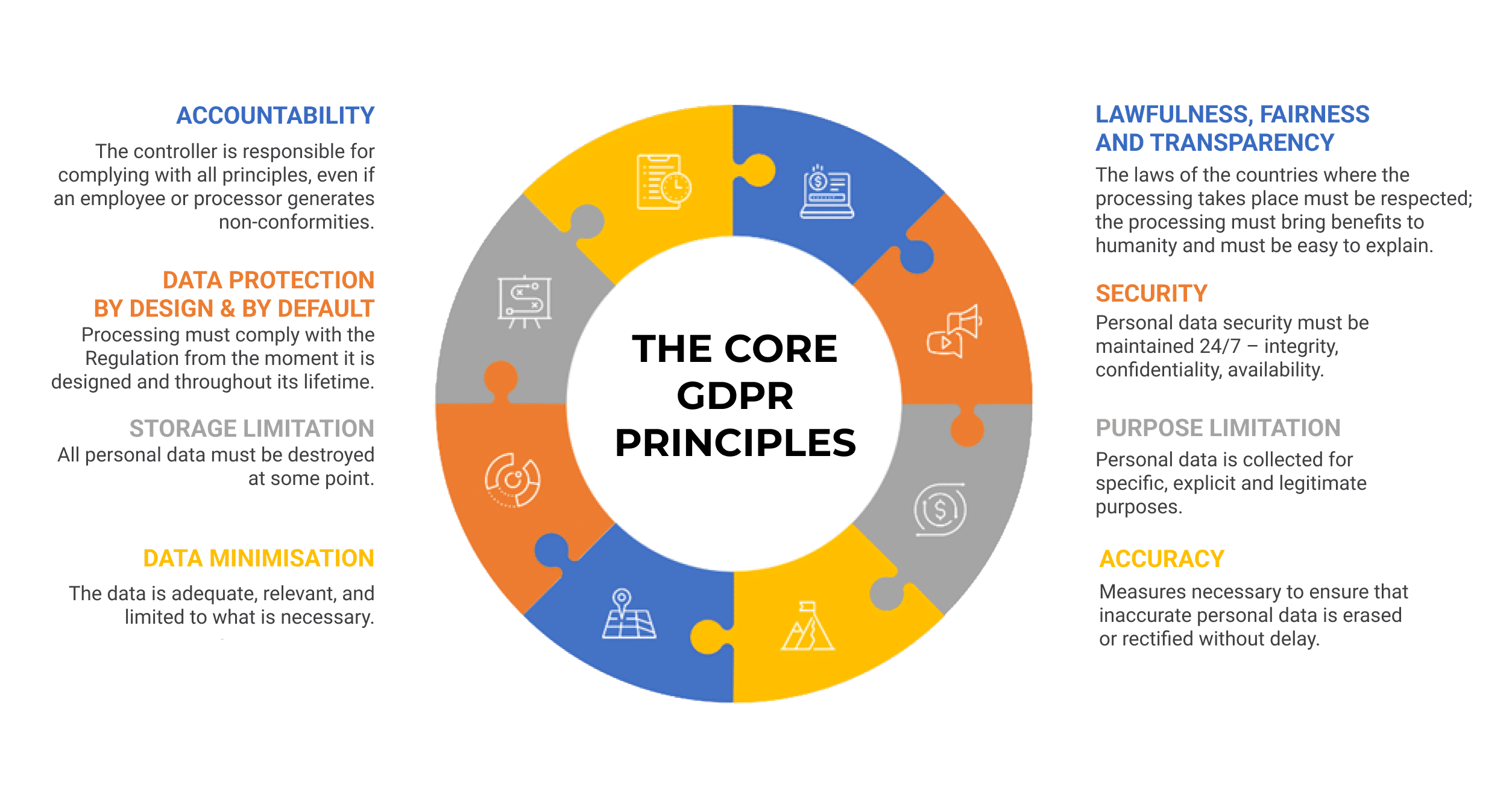

The core GDPR principles

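These core principles, set out in Article 5 of the GDPR, are key to ensuring the protection and privacy of personal data:

- Lawfulness, fairness and transparency: personal data must be processed lawfully, fairly and in a transparent manner in relation to the data subject.

- Purpose limitation: data must be collected for specified, explicit and legitimate purposes and not further processed in a manner incompatible with those purposes.

- Data minimisation: data must be adequate, relevant and limited to what is necessary for those purposes.

- Accuracy: data must be accurate and, where necessary, kept up to date.

- Storage limitation: data must not be kept in a form that identifies data subjects for longer than necessary.

- Integrity and confidentiality: data must be protected by appropriate security measures, including against unauthorised or unlawful processing and accidental loss, destruction or damage.

- Accountability: the controller is responsible for, and must be able to demonstrate, compliance with all of the above.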

These principles collectively ensure that personal data are handled with the utmost care and respect for individuals’ privacy rights. To learn more, check out Understanding 6 key GDPR principles.

Key stakeholders and roles

Under the GDPR, entities involved in data processing assume specific roles. A data controller determines the purposes and means of processing personal data and bears primary responsibility for ensuring compliance and communicating with data subjects. A data processor processes personal data on the controller’s behalf and only on its documented instructions, in line with the contract between them. Understanding these roles is essential when deploying AI solutions, because different stakeholders, such as AI developers, service providers, and end-users, may carry different obligations depending on their functions and activities.

You can learn more about the roles involved in data processing under the GDPR in this article: EU GDPR controller vs. processor – What are the differences?

Comparison of the EU AI Act and the GDPR

The following table outlines key aspects and intersections between the EU AI Act and the GDPR, highlighting areas of overlap and complementarity.

| Aspect | EU AI Act | GDPR | Similarities / Interplay |
| --- | --- | --- | --- |
| Purpose | Regulates AI-specific risks and ensures safe, ethical deployment of AI | Governs the processing of personal data and ensures data protection | Both aim to safeguard fundamental rights and promote risk-based, accountable practices |
| Scope | Applies to AI systems, especially high-risk ones | Applies to all personal data processing activities | Overlap occurs when AI systems process personal data, requiring dual compliance |
| Risk Management | Requires risk assessments for AI harms like bias or safety failures | Requires Data Protection Impact Assessments (DPIAs) for high-risk data processing | Risk assessments can be combined to address ethical, legal, and technical concerns |
| Transparency & Accountability | Mandates transparency in AI operations and human oversight | Requires lawful processing and data subject rights (e.g., access, correction) | Both promote explainability, auditability, and traceability in system design |
| Data Security | Requires secure development, access controls for high-risk AI systems | Mandates measures like encryption and pseudonymisation | Both stress protection against unauthorised access and data misuse |
| Fundamental Rights Protection | Focuses on preventing discrimination, ensuring fairness in AI use | Emphasises dignity, privacy, and non-discrimination through lawful data use | Shared focus on non-discrimination, privacy, and human dignity |
| Applicability to AI Systems | Direct regulation of AI systems, especially in sensitive sectors | Indirect, applies when AI processes personal data | The GDPR complements the AI Act when AI uses or affects personal data |
| Compliance Strategy | Sector-specific conformity assessments for high-risk AI | Requires data controllers/processors to demonstrate compliance | Encourages integrated compliance frameworks to avoid duplicative efforts |

As you can see, the EU AI Act and the GDPR converge in their shared emphasis on fundamental rights protection, transparency, and rigorous risk management. While the AI Act specifically addresses the safety and ethical implications of AI systems, the GDPR’s focus on personal data processing complements it wherever those systems handle personal information.
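Following the table’s suggestion that risk assessments can be combined, one option is a single risk register in which each entry is tagged with the regime or regimes it addresses, so that an AI Act risk-management view and a DPIA view can be filtered from the same data. This is a minimal sketch; the `Risk` fields, the example entries and the helper functions are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    description: str
    regimes: frozenset  # regulations the risk is relevant to: {"AI Act"}, {"GDPR"} or both
    mitigation: str


# Hypothetical entries for an AI-assisted recruitment tool.
REGISTER = [
    Risk("Ranking model disadvantages applicants via proxies for protected traits",
         frozenset({"AI Act", "GDPR"}),
         "bias testing on validation data; human review of rejections"),
    Risk("Applicant data kept longer than the stated retention period",
         frozenset({"GDPR"}),
         "scheduled deletion job with audit log"),
    Risk("Model accuracy degrades after deployment",
         frozenset({"AI Act"}),
         "post-market monitoring of accuracy metrics"),
]


def view(register, regime):
    """Risks relevant to one regime, e.g. input for a DPIA or an AI Act risk file."""
    return [r.description for r in register if regime in r.regimes]


def shared_risks(register):
    """Risks assessed once but relevant to both regimes, where duplicated effort is avoided."""
    return [r.description for r in register if len(r.regimes) > 1]
```

Here `view(REGISTER, "GDPR")` and `view(REGISTER, "AI Act")` each return two entries, and the bias risk appears in both because it is recorded once and tagged for both regimes.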

Documenting data and AI usage

Under both frameworks, documentation should start by mapping all data flows, personal and non-personal alike. Identify which data elements are collected, why they are collected, and whether AI processes use them. This initial step should also establish whether any AI system might qualify as high-risk under the EU AI Act:

- Maintain an inventory detailing data sources, data types, and storage durations.

- Classify AI systems according to their potential risk to individuals and society.

This documentation provides a foundation for subsequent compliance measures.
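As an illustration, the inventory and classification described above could be kept as structured records rather than free-form spreadsheets, which makes gaps easy to query. The sketch below is one possible layout under stated assumptions: the field names (`purposes`, `used_by_ai`, `retention_days`, `risk_tier`), the helper functions and the example entries are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DataElement:
    name: str
    source: str            # where the data comes from
    data_type: str         # e.g. "text", "image", "metric"
    personal: bool         # is this personal data under the GDPR?
    retention_days: int    # planned storage duration
    purposes: list = field(default_factory=list)
    used_by_ai: list = field(default_factory=list)  # names of AI systems consuming it


@dataclass
class AISystem:
    name: str
    intended_purpose: str
    risk_tier: Optional[str] = None  # set after review: "unacceptable", "high", "limited", "minimal"


def personal_data_feeding_ai(inventory):
    """Personal data elements consumed by at least one AI system."""
    return [e.name for e in inventory if e.personal and e.used_by_ai]


def unregistered_ai_systems(inventory, systems):
    """AI systems referenced by data elements but missing from the system register."""
    known = {s.name for s in systems}
    return sorted({ai for e in inventory for ai in e.used_by_ai} - known)


def unclassified_systems(systems):
    """Registered AI systems that have not yet been assigned a risk tier."""
    return [s.name for s in systems if s.risk_tier is None]


# Hypothetical example entries.
inventory = [
    DataElement("cv_text", "applicant portal", "text", True, 180,
                ["candidate screening"], ["cv-ranker"]),
    DataElement("server_cpu_load", "monitoring", "metric", False, 30,
                ["capacity planning"], ["autoscaler"]),
]
systems = [AISystem("cv-ranker", "rank job applications")]
```

With these entries, `personal_data_feeding_ai(inventory)` returns `["cv_text"]`, `unregistered_ai_systems(inventory, systems)` flags `["autoscaler"]` as missing from the register, and `unclassified_systems(systems)` shows that `cv-ranker` still awaits a risk tier.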

From an AI Act perspective, a detailed map of every dataset and model interaction lays the groundwork for correct risk classification, because it helps establish which tier a system falls into (prohibited, high, limited, or minimal risk) and, by extension, which obligations and conformity procedure apply. The same mapping exercise feeds the Annex IV technical documentation and informs the post-market monitoring plan that providers of high-risk systems must maintain. In addition, by capturing the full lineage of each dataset, the organisation creates a traceability record that supports the data-governance requirements of Article 10 as well as Articles 12 and 13, enabling authorities to follow the path from raw data through training and validation to the deployed model.
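The lineage idea can be sketched as a small dependency graph in which each artefact points to the artefacts it was derived from; walking the graph upstream from a deployed model recovers the path back to raw data. All artefact names below are hypothetical, and a real setup would more likely pull this information from a data catalogue or ML metadata store than from a hand-written dictionary.

```python
from collections import deque

# Each artefact maps to the artefacts it was directly derived from (hypothetical names).
UPSTREAM = {
    "cv-ranker:v3 (deployed)": ["cv-ranker:v3 (model)"],
    "cv-ranker:v3 (model)": ["training-set-2024-05", "validation-set-2024-05"],
    "training-set-2024-05": ["raw-applications-2023", "raw-applications-2024"],
    "validation-set-2024-05": ["raw-applications-2024"],
}


def trace_lineage(artefact, upstream):
    """Return every artefact the given one depends on, nearest first (breadth-first)."""
    seen, order = set(), []
    queue = deque(upstream.get(artefact, []))
    while queue:
        node = queue.popleft()
        if node in seen:  # shared inputs are listed only once
            continue
        seen.add(node)
        order.append(node)
        queue.extend(upstream.get(node, []))
    return order


def raw_sources(artefact, upstream):
    """Upstream artefacts with no recorded inputs of their own, i.e. the raw data."""
    return sorted(n for n in trace_lineage(artefact, upstream) if n not in upstream)
```

Calling `raw_sources("cv-ranker:v3 (deployed)", UPSTREAM)` returns `["raw-applications-2023", "raw-applications-2024"]`, while `trace_lineage` lists the model and the training and validation sets in between, which is the path an auditor would want to follow.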

From a GDPR perspective, the personal-data portion of the same inventory can serve as the basis for the Article 30 record of processing activities. It provides evidence that personal data are collected only for specified purposes and that no superfluous attributes are ingested, thereby demonstrating compliance with the purpose-limitation and data-minimisation principles. Because every data element is linked to an explicit purpose, the organisation can also produce clear, accurate privacy notices, ensuring transparency for data subjects.
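A minimal sketch of how the personal-data part of such an inventory might be grouped by purpose as a starting point for Article 30 entries, and checked for attributes with no documented purpose. It assumes hypothetical field names and covers only some of the items Article 30(1) requires (purposes, categories of personal data, retention); controller details, categories of data subjects and recipients, transfers, and security measures would still need to be added.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class DataElement:
    name: str
    personal: bool
    retention_days: int
    purposes: list = field(default_factory=list)


def records_by_purpose(inventory):
    """Group personal data elements by purpose (a partial view of an Article 30 record)."""
    records = defaultdict(lambda: {"data": [], "max_retention_days": 0})
    for e in inventory:
        if not e.personal:
            continue  # non-personal data is out of scope for the Article 30 record
        for purpose in e.purposes:
            entry = records[purpose]
            entry["data"].append(e.name)
            entry["max_retention_days"] = max(entry["max_retention_days"], e.retention_days)
    return dict(records)


def purposeless_personal_data(inventory):
    """Personal data with no documented purpose: candidates for removal under data minimisation."""
    return [e.name for e in inventory if e.personal and not e.purposes]


# Hypothetical example entries.
inventory = [
    DataElement("cv_text", True, 180, ["candidate screening"]),
    DataElement("email", True, 180, ["candidate screening", "interview scheduling"]),
    DataElement("date_of_birth", True, 180, []),
    DataElement("server_cpu_load", False, 30, ["capacity planning"]),
]
```

Here `records_by_purpose(inventory)` yields one entry for "candidate screening" and one for "interview scheduling", and `purposeless_personal_data(inventory)` returns `["date_of_birth"]`, an attribute that either needs a documented purpose or should not be collected.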

Practical compliance tips

Here are a few additional considerations that can aid in maintaining alignment with both regulations over time:

- Integrate compliance considerations at every stage of AI development. By weaving data protection and AI risk management into core processes, organisations can proactively prevent breaches, rather than merely reacting to them.

- Adopt a unified policy framework. Where feasible, merge your AI governance policies with GDPR data governance measures. This approach can reduce administrative overhead and strengthen the consistency of compliance efforts.

- Continuously monitor regulatory updates. Regulatory bodies may issue revised guidelines or clarifications that affect your compliance programme. Staying abreast of these developments helps prevent unexpected compliance gaps.

- Use existing data protection infrastructure. Leverage data mapping tools, DPIA templates, and established privacy management software already in place for GDPR compliance. These can be adapted to fulfil AI Act requirements, particularly for risk assessment documentation.

- Engage multidisciplinary teams. Collaboration between legal, technical, compliance, and operational experts fosters a more holistic approach. Early engagement reduces the risk of discovering compliance shortfalls after a system has been developed and deployed.

- Demonstrate accountability through thorough documentation and open communication. Maintaining comprehensive records not only satisfies regulatory mandates, but also fosters trust among stakeholders, including regulators, clients, and data subjects.

Responsible AI adoption: Balancing innovation and ethical compliance

As AI-driven innovation accelerates, the interplay between the EU AI Act and the GDPR becomes a decisive factor for organisations striving to stay compliant. The EU AI Act provides a framework for the ethical and safe deployment of AI, while the GDPR continues to mandate responsible handling of personal data. By implementing both regulations in parallel through a single, coordinated approach, organisations can harness AI’s potential while respecting individuals’ rights and meeting the stringent demands of both regimes.

To implement GDPR requirements in your AI project, use our EU GDPR Premium Documentation Toolkit.

Tudor Galos