You may be wondering what exactly the text of the ISO 42001 standard says. The standard is written in language that is hard to read, so in this article I’ll summarize the most important points from each ISO 42001 clause in an easy-to-understand way.

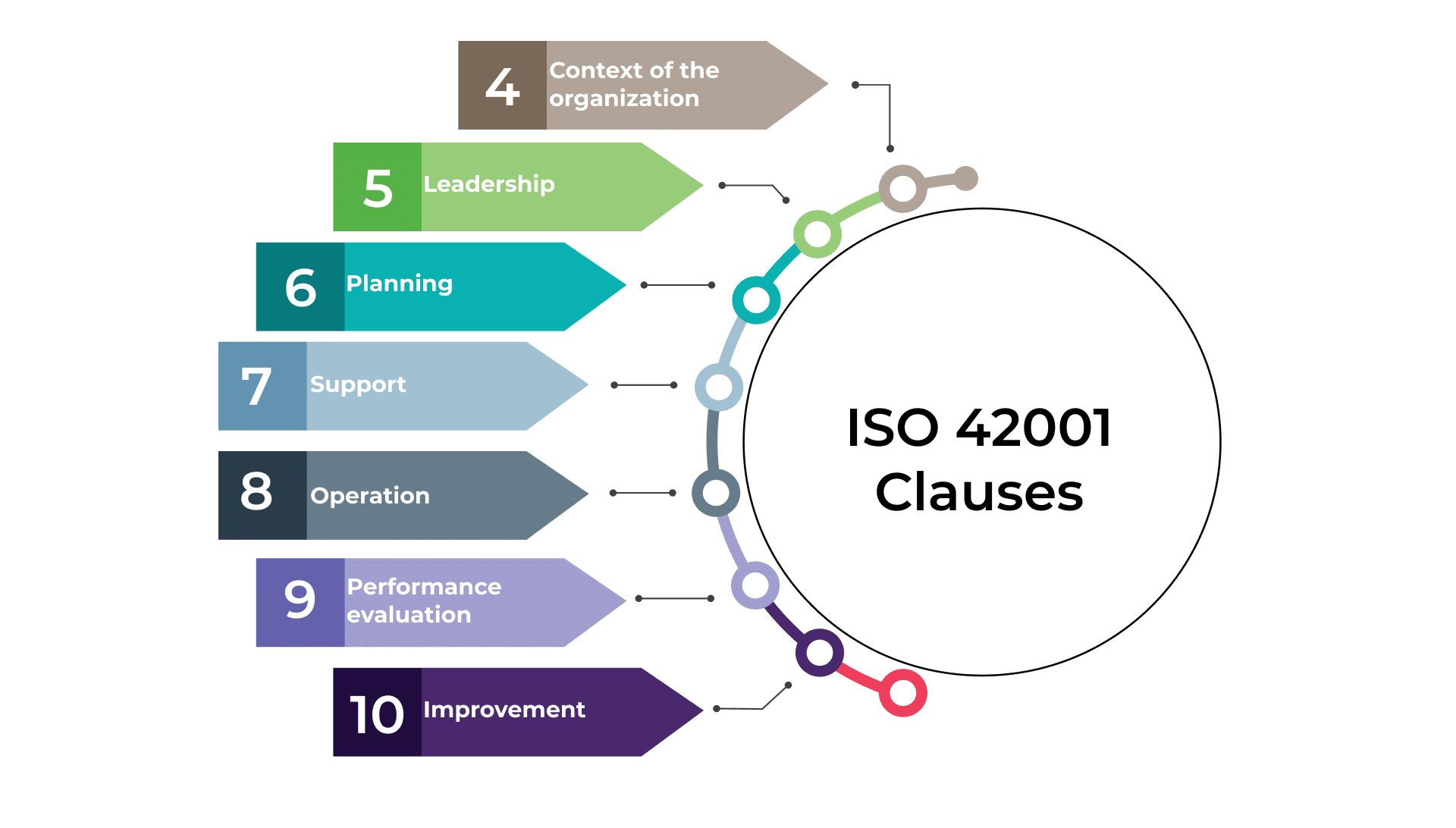

As with other ISO management system standards, the main clauses of ISO 42001 start with clause 4 Context of the organization and end with clause 10 Improvement. The standard also has Annex A, which lists reference control objectives and controls for AI, and Annex B, which provides guidance on how those controls could be implemented, plus two further annexes, C and D.

ISO 42001 is aligned with the high-level structure (HLS) set by the International Organization for Standardization (ISO), which means that it has the same structure and roughly the same clause names as other standards, including ISO 27001, ISO 9001, ISO 14001, and ISO 22301.

Below you’ll see all clauses from ISO 42001.

Clauses 1 to 3

These clauses contain no requirements that a company must implement, so they are less important when a company wants to comply with ISO 42001:

- Clause 1 Scope — Defines what the standard focuses on and states that organizations of any size, type, or nature can implement it.

- Clause 2 Normative references — Refers to ISO/IEC 22989 as the source of AI terms and concepts.

- Clause 3 Terms and definitions — Defines key terms such as interested party, risk, and process.

Clauses 4 to 10, described below, contain the mandatory requirements.

Clause 4 Context of the organization

Clause 4, called “Context of the organization,” requires analyzing internal and external factors or issues, identifying stakeholder expectations, and defining the scope of the AI management system (AIMS).

It has four sub-clauses:

- 4.1 Understanding the organization and its context — The organization must understand its internal and external context, including its role in developing or using AI systems, legal and ethical factors, and even climate change, to ensure its AI Management System can achieve its objectives.

- 4.2 Understanding the needs and expectations of interested parties — The organization must identify all stakeholders relevant to its AI Management System, understand their needs, and decide which ones it will address through the AIMS.

- 4.3 Determining the scope of the AI management system — The organization must define and document what parts of its activities the AI Management System covers, based on internal and external factors and relevant requirements.

- 4.4 AI management system — The organization must create, run, improve, and document an AI Management System that meets ISO 42001 requirements and clearly defines how all its processes work together.


Clause 5 Leadership

Clause 5, called “Leadership,” specifies what senior management must do, how roles and responsibilities are defined, and how the AI Policy that provides direction for the AI efforts is created.

It has three sub-clauses:

- 5.1 Leadership and commitment — Senior management must actively lead the AI Management System by aligning it with the organization’s strategy, providing resources, promoting good AI practices, and driving continual improvement.

- 5.2 AI policy — Senior management must approve an AI Policy that fits the organization’s purpose, guides AI objectives, ensures compliance, promotes continual improvement, and is clearly communicated.

- 5.3 Roles, responsibilities and authorities — Senior management must clearly assign and communicate who is responsible for managing the AI Management System and for reporting its performance.

Clause 6 Planning

Clause 6, called “Planning,” focuses on managing risks, defining AI objectives, and planning changes to the AIMS.

Sub-clause 6.1, called “Actions to address risks and opportunities,” is quite lengthy and has four sub-clauses of its own:

- 6.1.1 General — The organization must identify and manage AI-related risks and opportunities to ensure its AI Management System works effectively, prevents problems, improves over time, and keeps records of all actions taken.

- 6.1.2 AI risk assessment — The organization must define a clear and consistent process for assessing AI risks (identifying what could go wrong, how serious it could be, how likely it is to happen, and which risks need action) and keep records of the results.

- 6.1.3 AI risk treatment — The organization must create and document a clear plan for handling AI risks, which includes choosing suitable controls, comparing them against the controls listed in Annex A, adding any necessary controls that are missing, and getting management approval for how significant risks will be managed.

- 6.1.4 AI system impact assessment — The organization must assess and document how its AI systems could affect individuals, groups, and society, considering both their intended use and foreseeable misuse, and use those insights to improve its AI risk management.

Here are the remaining sub-clauses of clause 6:

- 6.2 AI objectives and planning to achieve them — The organization must set clear, measurable AI objectives that align with its AI Policy, plan how to achieve them, assign responsibilities, track progress, and keep records of the results.

- 6.3 Planning of changes — The organization must make any changes to its AI Management System carefully and in a planned way.

Clause 7 Support

Clause 7, called “Support,” covers resources, competence, training, awareness, communication, and management of documents and records.

It has five sub-clauses:

- 7.1 Resources — The organization must ensure it has all the necessary people, tools, and resources to set up, run, maintain, and continually improve its AI Management System.

- 7.2 Competence — The organization must ensure that everyone involved in its AI work has the right knowledge, skills, and experience, and must keep records proving their competence.

- 7.3 Awareness — Everyone involved in the organization’s AI activities must understand the AI Policy, know how their work supports the system’s success, and be aware of the consequences of not following its rules.

- 7.4 Communication — The organization must define what, when, how, and with whom it will communicate about its AI Management System.

- 7.5 Documented information — The organization must create, manage, and protect all documents and records needed for its AI Management System, making sure they’re accurate, up to date, properly approved, and accessible when needed.

Clause 8 Operation

Clause 8, called “Operation,” specifies requirements for operational planning and control over the AI system lifecycle.

It has four sub-clauses:

- 8.1 Operational planning and control — The organization must plan and control all processes needed to run its AI Management System, apply and monitor AI-related controls, fix problems when results fall short, manage any changes carefully, and control any externally provided processes.

- 8.2 AI risk assessment — The organization must regularly assess risks related to its AI systems, particularly in cases of major changes, and keep records of those assessments.

- 8.3 AI risk treatment — The organization must carry out and regularly check its AI Risk Treatment Plan, update it if new or unresolved risks appear, and keep records of all actions taken.

- 8.4 AI system impact assessment — The organization must regularly assess how its AI systems affect people and society (especially after major changes) and keep records of those assessments.

Clause 9 Performance evaluation

Clause 9, called “Performance evaluation,” sets requirements for monitoring, measuring, analyzing, and evaluating the performance of the AIMS, conducting internal audits, and performing management reviews.

It has three sub-clauses:

- 9.1 Monitoring, measurement, analysis and evaluation — The organization must regularly measure and analyze how well its AI Management System performs, using reliable methods and keeping records of the results.

- 9.2 Internal audit — The organization must regularly perform internal audits to check if its AI Management System follows the rules, works effectively, and is properly maintained, while keeping clear records of how audits are planned, done, and reported.

- 9.3 Management review — Senior management must regularly review how well the AI Management System is working, consider any changes or problems, decide on improvements, and keep records of these reviews.

Clause 10 Improvement

Clause 10, called “Improvement,” covers corrective action and continual improvement of the AIMS.

It has two sub-clauses:

- 10.1 Continual improvement — The organization must keep making its AI Management System better over time so it stays effective and fit for its purpose.

- 10.2 Nonconformity and corrective action — When something goes wrong in the AI Management System, the organization must fix the problem, find out why it happened, make sure it won’t happen again, check that the fix works, and keep records of everything done.

Annexes A to D

There are four annexes in this standard:

- Annex A Reference control objectives and controls — Lists 38 controls organized into nine sections that describe how to reduce AI risks.

- Annex B Implementation guidance for AI controls — Provides detailed guidance for each control from Annex A.

- Annex C Potential AI-related organizational objectives and risk sources — Provides a list of suggested AI objectives (that could be useful for setting the company’s objectives) and risk sources (that could be useful when identifying risks).

- Annex D Use of the AI management system across domains or sectors — Describes how the AIMS can be used across different industries and how it can be integrated with other ISO management standards.

To learn about the details of ISO 42001, sign up for this free ISO 42001 Foundations Course. It gives a detailed overview of each clause of this AI governance standard, together with practical examples of how to implement them.

Dejan Kosutic
